My First Open-Source AI Contribution: Fixing an Ollama Stream Bug in Prism PHP for Laravel


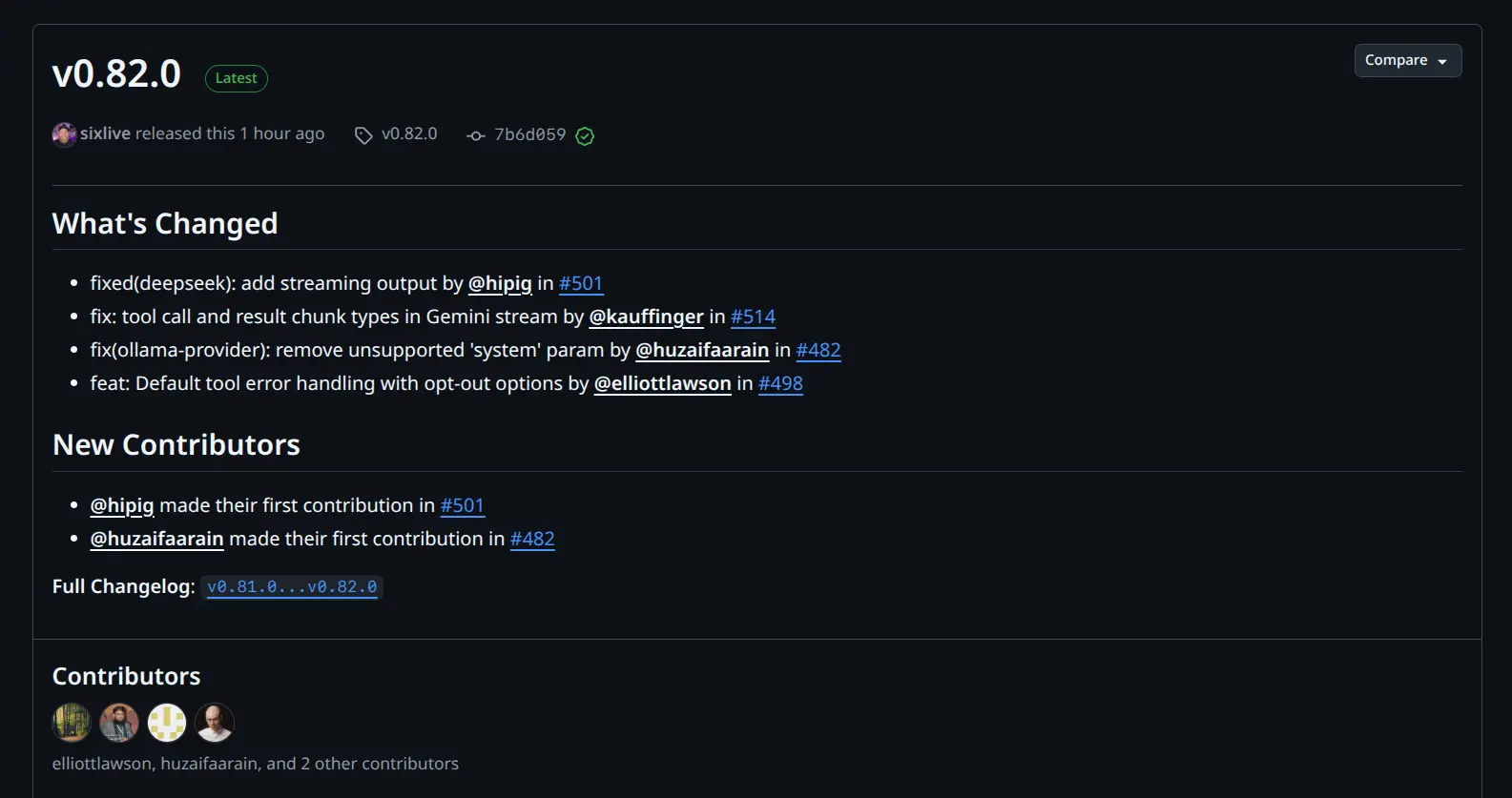

Another day, another achievement. I won’t overhype it, but let’s be real: there’s something incredibly satisfying about seeing a small code contribution of yours go live in a tool that many developers depend on.

I don’t usually rely on paid AI services. Instead, I prefer running local LLMs like DeepSeek and Llama through Ollama: they’re free and fast, and my Dell G-series machine with a 6 GB GPU and a 12-core CPU handles them just fine.

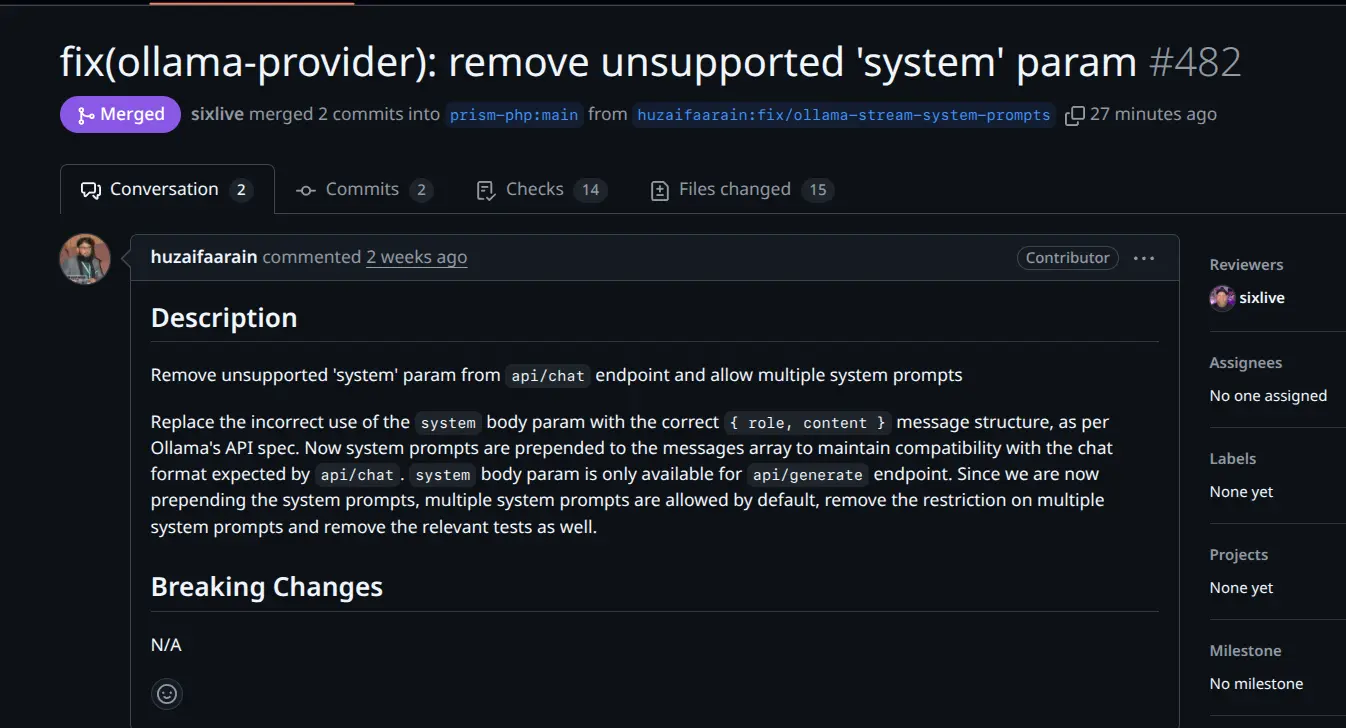

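Not long ago, I built a Laravel tool for myself that talks directly to Ollama through Laravel’s native HTTP client. While testing, I stumbled across a bug in the Prism package (prismphp.com), specifically in its Ollama Stream.php handler: it sent a top-level system parameter to the /api/chat endpoint. Ollama’s API spec defines that parameter only for /api/generate; /api/chat expects system prompts as messages with the role system. The Text.php and Structured.php handlers had the same problem.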
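For illustration, here is a minimal, framework-free PHP sketch of the two request shapes Ollama documents: /api/generate accepts a top-level system field, while /api/chat carries the system prompt as a message with role system. The helper names generatePayload() and chatPayload() are hypothetical and the model tag is just an example; only the JSON field names come from Ollama’s API docs.

```php
<?php

declare(strict_types=1);

// Hypothetical helper: request body for Ollama's /api/generate endpoint,
// where a top-level "system" field is part of the documented API.
function generatePayload(string $model, string $system, string $prompt): array
{
    return [
        'model' => $model,
        'system' => $system,
        'prompt' => $prompt,
        'stream' => false,
    ];
}

// Hypothetical helper: request body for Ollama's /api/chat endpoint.
// There is no top-level "system" field here; the system prompt travels
// as the first entry in "messages" with role "system".
function chatPayload(string $model, string $system, string $prompt): array
{
    return [
        'model' => $model,
        'messages' => [
            ['role' => 'system', 'content' => $system],
            ['role' => 'user', 'content' => $prompt],
        ],
        'stream' => false,
    ];
}

$model = 'qwen2.5:14b';
$system = 'You are a concise assistant.';
$prompt = 'Who are you?';

// Print both bodies as JSON so the shapes can be compared side by side.
echo json_encode(generatePayload($model, $system, $prompt), JSON_PRETTY_PRINT), PHP_EOL;
echo json_encode(chatPayload($model, $system, $prompt), JSON_PRETTY_PRINT), PHP_EOL;
```

Printing both bodies side by side makes the mismatch easy to spot: the generate-style system key simply has no place in a chat request.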

What next? My developer instinct kicked in: I forked the repo, fixed the issue in the package source, updated the tests, and submitted a PR... and today I got confirmation that it has been merged into the official repo. 🎉

Here’s the patch in case you want to review it:

```diff
From d2789d809490380590fc586bed0569a986456b78 Mon Sep 17 00:00:00 2001
From: Muhammad Huzaifa <huzaifa.itgroup@gmail.com>
Date: Wed, 9 Jul 2025 18:28:13 +0500
Subject: [PATCH] fix(ollama-provider): remove unsupported 'system' param from
 `api/chat` endpoint and allow multiple system prompts

Replace the incorrect use of the `system` body param with the correct `{ role, content }` message structure, as per Ollama's API spec. Now system prompts are prepended to the messages array to maintain compatibility with the chat format expected by `api/chat`. `system` body param is only available for `api/generate` endpoint. Since we are now prepending the system prompts, multiple system prompts are allowed by default, remove the restriction on multiple system prompts and remove the relevant tests as well.
---
 src/Providers/Ollama/Handlers/Stream.php     | 10 +++----
 src/Providers/Ollama/Handlers/Structured.php | 17 +++++------
 src/Providers/Ollama/Handlers/Text.php       | 10 +++----
 tests/Providers/Ollama/StructuredTest.php    | 30 --------------------
 tests/Providers/Ollama/TextTest.php          | 14 ---------
 5 files changed, 15 insertions(+), 66 deletions(-)

diff --git a/src/Providers/Ollama/Handlers/Stream.php b/src/Providers/Ollama/Handlers/Stream.php
index bb8ae38..5b27815 100644
--- a/src/Providers/Ollama/Handlers/Stream.php
+++ b/src/Providers/Ollama/Handlers/Stream.php
@@ -174,17 +174,15 @@ class Stream
 
     protected function sendRequest(Request $request): Response
     {
-        if (count($request->systemPrompts()) > 1) {
-            throw new PrismException('Ollama does not support multiple system prompts using withSystemPrompt / withSystemPrompts. However, you can provide additional system prompts by including SystemMessages in with withMessages.');
-        }
-
         return $this
             ->client
             ->withOptions(['stream' => true])
             ->post('api/chat', [
                 'model' => $request->model(),
-                'system' => data_get($request->systemPrompts(), '0.content', ''),
-                'messages' => (new MessageMap($request->messages()))->map(),
+                'messages' => (new MessageMap(array_merge(
+                    $request->systemPrompts(),
+                    $request->messages()
+                )))->map(),
                 'tools' => ToolMap::map($request->tools()),
                 'stream' => true,
                 'options' => Arr::whereNotNull(array_merge([
diff --git a/src/Providers/Ollama/Handlers/Structured.php b/src/Providers/Ollama/Handlers/Structured.php
index 0b95cf7..e7bae61 100644
--- a/src/Providers/Ollama/Handlers/Structured.php
+++ b/src/Providers/Ollama/Handlers/Structured.php
@@ -6,7 +6,6 @@ namespace Prism\Prism\Providers\Ollama\Handlers;
 
 use Illuminate\Http\Client\PendingRequest;
 use Illuminate\Support\Arr;
-use Prism\Prism\Exceptions\PrismException;
 use Prism\Prism\Providers\Ollama\Concerns\MapsFinishReason;
 use Prism\Prism\Providers\Ollama\Concerns\ValidatesResponse;
 use Prism\Prism\Providers\Ollama\Maps\MessageMap;
@@ -76,20 +75,18 @@ class Structured
      */
     protected function sendRequest(Request $request): array
     {
-        if (count($request->systemPrompts()) > 1) {
-            throw new PrismException('Ollama does not support multiple system prompts using withSystemPrompt / withSystemPrompts. However, you can provide additional system prompts by including SystemMessages in with withMessages.');
-        }
-
         $response = $this->client->post('api/chat', [
             'model' => $request->model(),
-            'system' => data_get($request->systemPrompts(), '0.content', ''),
-            'messages' => (new MessageMap($request->messages()))->map(),
+            'messages' => (new MessageMap(array_merge(
+                $request->systemPrompts(),
+                $request->messages()
+            )))->map(),
             'format' => $request->schema()->toArray(),
             'stream' => false,
             'options' => Arr::whereNotNull(array_merge([
-                'temperature' => $request->temperature(),
-                'num_predict' => $request->maxTokens() ?? 2048,
-                'top_p' => $request->topP(),
+                'temperature' => $request->temperature(),
+                'num_predict' => $request->maxTokens() ?? 2048,
+                'top_p' => $request->topP(),
             ], $request->providerOptions())),
         ]);
 
diff --git a/src/Providers/Ollama/Handlers/Text.php b/src/Providers/Ollama/Handlers/Text.php
index f4777a2..b167da6 100644
--- a/src/Providers/Ollama/Handlers/Text.php
+++ b/src/Providers/Ollama/Handlers/Text.php
@@ -68,16 +68,14 @@ class Text
      */
     protected function sendRequest(Request $request): array
     {
-        if (count($request->systemPrompts()) > 1) {
-            throw new PrismException('Ollama does not support multiple system prompts using withSystemPrompt / withSystemPrompts. However, you can provide additional system prompts by including SystemMessages in with withMessages.');
-        }
-
         $response = $this
             ->client
             ->post('api/chat', [
                 'model' => $request->model(),
-                'system' => data_get($request->systemPrompts(), '0.content', ''),
-                'messages' => (new MessageMap($request->messages()))->map(),
+                'messages' => (new MessageMap(array_merge(
+                    $request->systemPrompts(),
+                    $request->messages()
+                )))->map(),
                 'tools' => ToolMap::map($request->tools()),
                 'stream' => false,
                 'options' => Arr::whereNotNull(array_merge([
diff --git a/tests/Providers/Ollama/StructuredTest.php b/tests/Providers/Ollama/StructuredTest.php
index 2630a8f..afb0ced 100644
--- a/tests/Providers/Ollama/StructuredTest.php
+++ b/tests/Providers/Ollama/StructuredTest.php
@@ -53,33 +53,3 @@ it('returns structured output', function (): void {
     expect($response->structured['open_source'])->toBeArray();
 
 });
-
-it('throws an exception with multiple system prompts', function (): void {
-    Http::preventStrayRequests();
-
-    $schema = new ObjectSchema(
-        'output',
-        'the output object',
-        [
-            new StringSchema('name', 'The users name'),
-            new ArraySchema('hobbies', 'a list of the users hobbies',
-                new StringSchema('name', 'the name of the hobby'),
-            ),
-            new ArraySchema('open_source', 'The users open source contributions',
-                new StringSchema('name', 'the name of the project'),
-            ),
-        ],
-        ['name', 'hobbies', 'open_source']
-    );
-
-    $response = Prism::structured()
-        ->using('ollama', 'qwen2.5:14b')
-        ->withSchema($schema)
-        ->withSystemPrompts([
-            new SystemMessage('MODEL ADOPTS ROLE of [PERSONA: Nyx the Cthulhu]!'),
-            new SystemMessage('But my friends call my Nyx.'),
-        ])
-        ->withPrompt('Who are you?')
-        ->asStructured();
-
-})->throws(PrismException::class, 'Ollama does not support multiple system prompts using withSystemPrompt / withSystemPrompts. However, you can provide additional system prompts by including SystemMessages in with withMessages.');
diff --git a/tests/Providers/Ollama/TextTest.php b/tests/Providers/Ollama/TextTest.php
index 505bf39..2a4d4f4 100644
--- a/tests/Providers/Ollama/TextTest.php
+++ b/tests/Providers/Ollama/TextTest.php
@@ -167,17 +167,3 @@ describe('Image support', function (): void {
         });
     });
 });
-
-it('throws an exception with multiple system prompts', function (): void {
-    Http::preventStrayRequests();
-
-    $response = Prism::text()
-        ->using('ollama', 'qwen2.5:14b')
-        ->withSystemPrompts([
-            new SystemMessage('MODEL ADOPTS ROLE of [PERSONA: Nyx the Cthulhu]!'),
-            new SystemMessage('But my friends call my Nyx.'),
-        ])
-        ->withPrompt('Who are you?')
-        ->asText();
-
-})->throws(PrismException::class, 'Ollama does not support multiple system prompts using withSystemPrompt / withSystemPrompts. However, you can provide additional system prompts by including SystemMessages in with withMessages.');
-- 
2.34.1
```
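The heart of the patch is the array_merge() call that prepends system prompts to the conversation, which is also why the multiple-system-prompt guard could be dropped. Below is a simplified, framework-free PHP sketch of the before and after behavior. legacyChatBody() and patchedChatBody() are hypothetical stand-ins for the handlers’ sendRequest() methods, and system prompts are plain strings here rather than Prism’s SystemMessage objects mapped through MessageMap.

```php
<?php

declare(strict_types=1);

/**
 * Before the patch (simplified): only one system prompt fits in the
 * top-level "system" key, so more than one had to be rejected.
 *
 * @param string[] $systemPrompts
 * @param array<int, array{role: string, content: string}> $messages
 */
function legacyChatBody(string $model, array $systemPrompts, array $messages): array
{
    if (count($systemPrompts) > 1) {
        throw new InvalidArgumentException('Multiple system prompts are not supported.');
    }

    return [
        'model' => $model,
        'system' => $systemPrompts[0] ?? '', // not a documented /api/chat field
        'messages' => $messages,
    ];
}

/**
 * After the patch (simplified): every system prompt becomes a
 * role=system message, prepended in order, so several are fine.
 *
 * @param string[] $systemPrompts
 * @param array<int, array{role: string, content: string}> $messages
 */
function patchedChatBody(string $model, array $systemPrompts, array $messages): array
{
    $systemMessages = array_map(
        fn (string $content): array => ['role' => 'system', 'content' => $content],
        $systemPrompts
    );

    return [
        'model' => $model,
        'messages' => array_merge($systemMessages, $messages),
    ];
}

$systemPrompts = ['You are Nyx.', 'Answer in one sentence.'];
$messages = [['role' => 'user', 'content' => 'Who are you?']];

try {
    legacyChatBody('qwen2.5:14b', $systemPrompts, $messages);
} catch (InvalidArgumentException $e) {
    echo 'legacy: ', $e->getMessage(), PHP_EOL;
}

echo json_encode(patchedChatBody('qwen2.5:14b', $systemPrompts, $messages), JSON_PRETTY_PRINT), PHP_EOL;
```

Because the system prompts simply become the first entries of messages, the order you pass them in is the order the model sees them.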

🚀 Why This Matters

This wasn’t just a bugfix. It was a reminder that real-world contributions come from curiosity and the will to improve what you use. Whether it’s Laravel, Ollama, or a lesser-known AI package, fixing one small piece can help the whole community.

🔗 Let’s Build Something Together

If you’re looking for someone who not only builds robust systems but also dives deep into debugging, fixing, and contributing back to the community—I’m your guy.

👉 Visit my Upwork Profile to see what I’ve done, what I can do, and how we can work together. Let's build tools that don’t just work—they improve others’ work too.